Compare commits

8 Commits

main

...

distribute

| Author | SHA1 | Date | |
|---|---|---|---|
| | d6a375ea34 | | |
| | a91577319e | | |
| | 5ff45521c5 | | |
| | d62bbff1f0 | | |
| | 7091378d35 | | |
| | 70aacb3d75 | | |
| | b9f761d256 | | |
| | aca1e0698b | | |

73

.github/workflows/sync-from-gitea-deploy.yml

vendored

Normal file

@@ -0,0 +1,73 @@

|

|||||||

|

name: Sync from Gitea (distribute→distribute, keep workflow)

|

||||||

|

|

||||||

|

on:

|

||||||

|

schedule:

|

||||||

|

# 2 times per day (UTC): 7:00, 11:00

|

||||||

|

- cron: '0 7,11 * * *'

|

||||||

|

workflow_dispatch: {}

|

||||||

|

|

||||||

|

permissions:

|

||||||

|

contents: write # allow pushing with GITHUB_TOKEN

|

||||||

|

|

||||||

|

jobs:

|

||||||

|

mirror:

|

||||||

|

runs-on: ubuntu-latest

|

||||||

|

|

||||||

|

steps:

|

||||||

|

- name: Check out GitHub repo

|

||||||

|

uses: actions/checkout@v4

|

||||||

|

with:

|

||||||

|

fetch-depth: 0

|

||||||

|

|

||||||

|

- name: Fetch from Gitea

|

||||||

|

env:

|

||||||

|

GITEA_URL: ${{ secrets.GITEA_URL }}

|

||||||

|

GITEA_USER: ${{ secrets.GITEA_USERNAME }}

|

||||||

|

GITEA_TOKEN: ${{ secrets.GITEA_TOKEN }}

|

||||||

|

run: |

|

||||||

|

# Build authenticated Gitea URL: https://USER:TOKEN@...

|

||||||

|

AUTH_URL="${GITEA_URL/https:\/\//https:\/\/$GITEA_USER:$GITEA_TOKEN@}"

|

||||||

|

|

||||||

|

git remote add gitea "$AUTH_URL"

|

||||||

|

git fetch gitea --prune

|

||||||

|

|

||||||

|

- name: Update distribute from gitea/distribute, keep workflow, and force-push

|

||||||

|

env:

|

||||||

|

GH_TOKEN: ${{ secrets.GITHUB_TOKEN }}

|

||||||

|

GH_REPO: ${{ github.repository }}

|

||||||

|

run: |

|

||||||

|

# Configure identity for commits made by this workflow

|

||||||

|

git config user.name "github-actions[bot]"

|

||||||

|

git config user.email "github-actions[bot]@users.noreply.github.com"

|

||||||

|

|

||||||

|

# Authenticated push URL for GitHub

|

||||||

|

git remote set-url origin "https://x-access-token:${GH_TOKEN}@github.com/${GH_REPO}.git"

|

||||||

|

|

||||||

|

WF_PATH=".github/workflows/sync-from-gitea.yml"

|

||||||

|

|

||||||

|

# If the workflow exists in the current checkout, save a copy

|

||||||

|

if [ -f "$WF_PATH" ]; then

|

||||||

|

mkdir -p /tmp/gh-workflows

|

||||||

|

cp "$WF_PATH" /tmp/gh-workflows/

|

||||||

|

fi

|

||||||

|

|

||||||

|

# Reset local 'distribute' to exactly match gitea/distribute

|

||||||

|

if git show-ref --verify --quiet refs/remotes/gitea/distribute; then

|

||||||

|

git checkout -B distribute gitea/distribute

|

||||||

|

else

|

||||||

|

echo "No gitea/distribute found, nothing to sync."

|

||||||

|

exit 0

|

||||||

|

fi

|

||||||

|

|

||||||

|

# Restore the workflow into the new HEAD and commit if needed

|

||||||

|

if [ -f "/tmp/gh-workflows/sync-from-gitea.yml" ]; then

|

||||||

|

mkdir -p .github/workflows

|

||||||

|

cp /tmp/gh-workflows/sync-from-gitea.yml "$WF_PATH"

|

||||||

|

git add "$WF_PATH"

|

||||||

|

if ! git diff --cached --quiet; then

|

||||||

|

git commit -m "Inject GitHub sync workflow"

|

||||||

|

fi

|

||||||

|

fi

|

||||||

|

|

||||||

|

# Force-push distribute so GitHub mirrors Gitea + workflow

|

||||||

|

git push origin distribute --force

|

||||||

2

.github/workflows/sync-from-gitea.yml

vendored

@@ -3,7 +3,7 @@ name: Sync from Gitea (main→main, keep workflow)

|

|||||||

on:

|

on:

|

||||||

schedule:

|

schedule:

|

||||||

# 2 times per day (UTC): 7:00, 11:00

|

# 2 times per day (UTC): 7:00, 11:00

|

||||||

- cron: '0 19,23 * * *'

|

- cron: '0 7,11 * * *'

|

||||||

workflow_dispatch: {}

|

workflow_dispatch: {}

|

||||||

|

|

||||||

permissions:

|

permissions:

|

||||||

|

|||||||

@@ -1,23 +1,61 @@

|

|||||||

export default {

|

export default {

|

||||||

index: "Course Description",

|

menu: {

|

||||||

"---":{

|

title: 'Home',

|

||||||

type: 'separator'

|

type: 'menu',

|

||||||

|

items: {

|

||||||

|

index: {

|

||||||

|

title: 'Home',

|

||||||

|

href: '/'

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

title: 'About',

|

||||||

|

href: '/about'

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

title: 'Contact Me',

|

||||||

|

href: '/contact'

|

||||||

|

}

|

||||||

|

},

|

||||||

},

|

},

|

||||||

CSE332S_L1: "Object-Oriented Programming Lab (Lecture 1)",

|

Math3200'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L2: "Object-Oriented Programming Lab (Lecture 2)",

|

Math429'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L3: "Object-Oriented Programming Lab (Lecture 3)",

|

Math4111'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L4: "Object-Oriented Programming Lab (Lecture 4)",

|

Math4121'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L5: "Object-Oriented Programming Lab (Lecture 5)",

|

Math4201'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L6: "Object-Oriented Programming Lab (Lecture 6)",

|

Math416'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L7: "Object-Oriented Programming Lab (Lecture 7)",

|

Math401'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L8: "Object-Oriented Programming Lab (Lecture 8)",

|

CSE332S'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L9: "Object-Oriented Programming Lab (Lecture 9)",

|

CSE347'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L10: "Object-Oriented Programming Lab (Lecture 10)",

|

CSE442T'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L11: "Object-Oriented Programming Lab (Lecture 11)",

|

CSE5313'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L12: "Object-Oriented Programming Lab (Lecture 12)",

|

CSE510'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L13: "Object-Oriented Programming Lab (Lecture 13)",

|

CSE559A'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L14: "Object-Oriented Programming Lab (Lecture 14)",

|

CSE5519'CSE332S_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE332S_L15: "Object-Oriented Programming Lab (Lecture 15)",

|

Swap: {

|

||||||

CSE332S_L16: "Object-Oriented Programming Lab (Lecture 16)",

|

display: 'hidden',

|

||||||

CSE332S_L17: "Object-Oriented Programming Lab (Lecture 17)"

|

theme:{

|

||||||

}

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

index: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

@@ -1,18 +1,61 @@

|

|||||||

export default {

|

export default {

|

||||||

index: "Course Description",

|

menu: {

|

||||||

"---":{

|

title: 'Home',

|

||||||

type: 'separator'

|

type: 'menu',

|

||||||

|

items: {

|

||||||

|

index: {

|

||||||

|

title: 'Home',

|

||||||

|

href: '/'

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

title: 'About',

|

||||||

|

href: '/about'

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

title: 'Contact Me',

|

||||||

|

href: '/contact'

|

||||||

|

}

|

||||||

|

},

|

||||||

},

|

},

|

||||||

Exam_reviews: "Exam reviews",

|

Math3200'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L1: "Analysis of Algorithms (Lecture 1)",

|

Math429'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L2: "Analysis of Algorithms (Lecture 2)",

|

Math4111'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L3: "Analysis of Algorithms (Lecture 3)",

|

Math4121'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L4: "Analysis of Algorithms (Lecture 4)",

|

Math4201'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L5: "Analysis of Algorithms (Lecture 5)",

|

Math416'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L6: "Analysis of Algorithms (Lecture 6)",

|

Math401'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L7: "Analysis of Algorithms (Lecture 7)",

|

CSE332S'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L8: "Analysis of Algorithms (Lecture 8)",

|

CSE347'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L9: "Analysis of Algorithms (Lecture 9)",

|

CSE442T'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L10: "Analysis of Algorithms (Lecture 10)",

|

CSE5313'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE347_L11: "Analysis of Algorithms (Lecture 11)"

|

CSE510'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

}

|

CSE559A'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

|

CSE5519'CSE347_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

|

Swap: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

index: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

@@ -1,31 +1,61 @@

|

|||||||

export default {

|

export default {

|

||||||

index: "Course Description",

|

menu: {

|

||||||

"---":{

|

title: 'Home',

|

||||||

type: 'separator'

|

type: 'menu',

|

||||||

|

items: {

|

||||||

|

index: {

|

||||||

|

title: 'Home',

|

||||||

|

href: '/'

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

title: 'About',

|

||||||

|

href: '/about'

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

title: 'Contact Me',

|

||||||

|

href: '/contact'

|

||||||

|

}

|

||||||

|

},

|

||||||

},

|

},

|

||||||

Exam_reviews: "Exam reviews",

|

Math3200'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L1: "Introduction to Cryptography (Lecture 1)",

|

Math429'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L2: "Introduction to Cryptography (Lecture 2)",

|

Math4111'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L3: "Introduction to Cryptography (Lecture 3)",

|

Math4121'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L4: "Introduction to Cryptography (Lecture 4)",

|

Math4201'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L5: "Introduction to Cryptography (Lecture 5)",

|

Math416'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L6: "Introduction to Cryptography (Lecture 6)",

|

Math401'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L7: "Introduction to Cryptography (Lecture 7)",

|

CSE332S'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L8: "Introduction to Cryptography (Lecture 8)",

|

CSE347'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L9: "Introduction to Cryptography (Lecture 9)",

|

CSE442T'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L10: "Introduction to Cryptography (Lecture 10)",

|

CSE5313'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L11: "Introduction to Cryptography (Lecture 11)",

|

CSE510'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L12: "Introduction to Cryptography (Lecture 12)",

|

CSE559A'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L13: "Introduction to Cryptography (Lecture 13)",

|

CSE5519'CSE442T_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE442T_L14: "Introduction to Cryptography (Lecture 14)",

|

Swap: {

|

||||||

CSE442T_L15: "Introduction to Cryptography (Lecture 15)",

|

display: 'hidden',

|

||||||

CSE442T_L16: "Introduction to Cryptography (Lecture 16)",

|

theme:{

|

||||||

CSE442T_L17: "Introduction to Cryptography (Lecture 17)",

|

timestamp: true,

|

||||||

CSE442T_L18: "Introduction to Cryptography (Lecture 18)",

|

}

|

||||||

CSE442T_L19: "Introduction to Cryptography (Lecture 19)",

|

},

|

||||||

CSE442T_L20: "Introduction to Cryptography (Lecture 20)",

|

index: {

|

||||||

CSE442T_L21: "Introduction to Cryptography (Lecture 21)",

|

display: 'hidden',

|

||||||

CSE442T_L22: "Introduction to Cryptography (Lecture 22)",

|

theme:{

|

||||||

CSE442T_L23: "Introduction to Cryptography (Lecture 23)",

|

sidebar: false,

|

||||||

CSE442T_L24: "Introduction to Cryptography (Lecture 24)"

|

timestamp: true,

|

||||||

}

|

}

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

@@ -90,6 +90,8 @@ Parameter explanations:

|

|||||||

|

|

||||||

IGM makes decentralized execution optimal with respect to the learned factorized value.

|

IGM makes decentralized execution optimal with respect to the learned factorized value.

|

||||||

|

|

||||||

|

## Linear Value Factorization

|

||||||

|

|

||||||

### VDN (Value Decomposition Networks)

|

### VDN (Value Decomposition Networks)

|

||||||

|

|

||||||

VDN assumes:

|

VDN assumes:

|

||||||

|

|||||||

@@ -1,101 +0,0 @@

|

|||||||

# CSE510 Deep Reinforcement Learning (Lecture 25)

|

|

||||||

|

|

||||||

> Restore human intelligence

|

|

||||||

|

|

||||||

## Linear Value Factorization

|

|

||||||

|

|

||||||

[link to paper](https://arxiv.org/abs/2006.00587)

|

|

||||||

|

|

||||||

### Why Does Linear Factorization Work?

|

|

||||||

|

|

||||||

- Multi-agent reinforcement learning results are mostly empirical

|

|

||||||

- Theoretical Model: Factored Multi-Agent Fitted Q-Iteration (FMA-FQI)

|

|

||||||

|

|

||||||

#### Theorem 1

|

|

||||||

|

|

||||||

It realizes a **counterfactual** credit assignment mechanism.

|

|

||||||

|

|

||||||

Agent $i$:

|

|

||||||

|

|

||||||

$$

|

|

||||||

Q_i^{(t+1)}(s,a_i)=\mathbb{E}_{a_{-i}'}\left[y^{(t)}(s,a_i\oplus a_{-i}')\right]-\frac{n-1}{n}\mathbb{E}_{a'}\left[y^{(t)}(s,a')\right]

|

|

||||||

$$

|

|

||||||

|

|

||||||

Here $\mathbb{E}_{a_{-i}'}\left[y^{(t)}(s,a_i\oplus a_{-i}')\right]$ is the evaluation of $a_i$.

|

|

||||||

|

|

||||||

and $\mathbb{E}_{a'}\left[y^{(t)}(s,a')\right]$ is the baseline

|

|

||||||

|

|

||||||

The target $Q$-value: $y^{(t)}(s,a)=r+\gamma\max_{a'}Q_{tot}^{(t)}(s',a')$

|

|

||||||

|

|

||||||

#### Theorem 2

|

|

||||||

|

|

||||||

it has local convergence with on-policy training

|

|

||||||

|

|

||||||

##### Limitations of Linear Factorization

|

|

||||||

|

|

||||||

Linear: $Q_{tot}(s,a)=\sum_{i=1}^{n}Q_{i}(s,a_i)$

|

|

||||||

|

|

||||||

Limited Representation: Suboptimal (Prisoner's Dilemma)

|

|

||||||

|

|

||||||

| $a_1 \backslash a_2$ | Action 1 | Action 2 |

|

|

||||||

|---|---|---|

|

|

||||||

|Action 1| **8** | -12 |

|

|

||||||

|Action 2| -12 | 0 |

|

|

||||||

|

|

||||||

After linear factorization:

|

|

||||||

|

|

||||||

| $a_1 \backslash a_2$ | Action 1 | Action 2 |

|

|

||||||

|---|---|---|

|

|

||||||

|Action 1| -6.5 | -5 |

|

|

||||||

|Action 2| -5 | **-3.5** |
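
As a rough illustration of the closed-form update in Theorem 1, the sketch below evaluates it on the payoff matrix above. It assumes a one-step game, so the target is just the payoff ($y=r$), and it assumes the two agents draw their actions independently from distributions `p1` and `p2`. The function name `linear_factorize` and its parameters are hypothetical. It is not meant to reproduce the exact numbers in the second table, which depend on the training distribution; it only shows that the fitted $Q_{tot}$ can rank a suboptimal joint action highest.

```python
import numpy as np


def linear_factorize(payoff, p1, p2):
    """Per-agent values from the Theorem 1 update for a 2-agent, one-step game.

    payoff[a1, a2] plays the role of the target y(s, a); p1 and p2 are the
    (assumed independent) action distributions of agents 1 and 2.
    """
    payoff = np.asarray(payoff, dtype=float)
    p1 = np.asarray(p1, dtype=float)
    p2 = np.asarray(p2, dtype=float)
    n = 2
    baseline = p1 @ payoff @ p2                  # E_{a'}[y(s, a')]
    q1 = payoff @ p2 - (n - 1) / n * baseline    # evaluation of a_1 minus scaled baseline
    q2 = payoff.T @ p1 - (n - 1) / n * baseline  # same for agent 2
    q_tot = q1[:, None] + q2[None, :]            # linear factorization: Q_tot = Q_1 + Q_2
    return q1, q2, q_tot


payoff = [[8, -12], [-12, 0]]
# Assumed data distribution: both agents pick Action 2 (index 1) 90% of the time.
q1, q2, q_tot = linear_factorize(payoff, [0.1, 0.9], [0.1, 0.9])
greedy = np.unravel_index(np.argmax(q_tot), q_tot.shape)
print(q_tot)
print(greedy)
```

Under this assumed distribution the greedy joint action of the factorized value is (Action 2, Action 2), even though (Action 1, Action 1) has the highest true payoff. With a uniform distribution the same function recovers the optimal joint action, which suggests how strongly the result depends on the data.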

|

|

||||||

|

|

||||||

#### Theorem 3

|

|

||||||

|

|

||||||

it may diverge with off-policy training

|

|

||||||

|

|

||||||

### Perfect Alignment: IGM Factorization

|

|

||||||

|

|

||||||

- Individual-Global Maximization (IGM) Constraint

|

|

||||||

|

|

||||||

$$

|

|

||||||

\argmax_{a}Q_{tot}(s,a)=(\argmax_{a_1}Q_1(s,a_1), \dots, \argmax_{a_n}Q_n(s,a_n))

|

|

||||||

$$

|

|

||||||

|

|

||||||

- IGM Factorization: $Q_{tot} (s,a)=f(Q_1(s,a_1), \dots, Q_n(s,a_n))$

|

|

||||||

- Factorization function $f$ realizes all functions satisfying IGM.

|

|

||||||

|

|

||||||

- FQI-IGM: Fitted Q-Iteration with IGM Factorization
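
The IGM constraint can be checked mechanically for a single state. The following is a minimal sketch, assuming tabular values and unique maximizers; the helper name `satisfies_igm` is hypothetical and not taken from the papers linked in these notes.

```python
import numpy as np


def satisfies_igm(q_tot, q_individual):
    """Check the IGM constraint for one state.

    q_tot has one axis per agent; q_individual[i] is agent i's value vector.
    Assumes every argmax is unique (ties are not handled).
    """
    q_tot = np.asarray(q_tot, dtype=float)
    joint_greedy = np.unravel_index(np.argmax(q_tot), q_tot.shape)
    local_greedy = tuple(int(np.argmax(q)) for q in q_individual)
    return tuple(int(a) for a in joint_greedy) == local_greedy


# A linear sum Q_tot = Q_1 + Q_2 satisfies IGM by construction.
q1 = np.array([1.0, 3.0])
q2 = np.array([2.0, 0.0])
print(satisfies_igm(q1[:, None] + q2[None, :], [q1, q2]))

# The true payoff matrix from the earlier example, paired with per-agent
# values (-3.25, -1.75) inferred from the factorized table assuming symmetric
# agents, does not: the individual argmaxes miss the joint optimum.
payoff = np.array([[8.0, -12.0], [-12.0, 0.0]])
q_lin = np.array([-3.25, -1.75])
print(satisfies_igm(payoff, [q_lin, q_lin]))
```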

|

|

||||||

|

|

||||||

#### Theorem 4

|

|

||||||

|

|

||||||

Convergence & optimality. FQI-IGM globally converges to the optimal value function in multi-agent MDPs.

|

|

||||||

|

|

||||||

### QPLEX: Multi-Agent Q-Learning with IGM Factorization

|

|

||||||

|

|

||||||

[link to paper](https://arxiv.org/pdf/2008.01062)

|

|

||||||

|

|

||||||

IGM: $\argmax_a Q_{tot}(s,a)=\begin{pmatrix}

|

|

||||||

\argmax_{a_1}Q_1(s,a_1) \\

|

|

||||||

\dots \\

|

|

||||||

\argmax_{a_n}Q_n(s,a_n)

|

|

||||||

\end{pmatrix}

|

|

||||||

$

|

|

||||||

|

|

||||||

Core idea:

|

|

||||||

|

|

||||||

- Fit the values of optimal actions well

|

|

||||||

- Approximate the values of non-optimal actions

|

|

||||||

|

|

||||||

QPLEX Mixing Network:

|

|

||||||

|

|

||||||

$$

|

|

||||||

Q_{tot}(s,a)=\sum_{i=1}^{n}\max_{a_i'}Q_i(s,a_i')+\sum_{i=1}^{n} \lambda_i(s,a)(Q_i(s,a_i)-\max_{a_i'}Q_i(s,a_i'))

|

|

||||||

$$

|

|

||||||

|

|

||||||

Here $\sum_{i=1}^{n}\max_{a_i'}Q_i(s,a_i')$ is the baseline $\max_a Q_{tot}(s,a)$

|

|

||||||

|

|

||||||

And $Q_i(s,a_i)-\max_{a_i'}Q_i(s,a_i')$ is the "advantage".

|

|

||||||

|

|

||||||

Coefficients: $\lambda_i(s,a)>0$, **easily realized and learned with neural networks**
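
The mixing formula can be sketched for one state and one joint action as follows. This is only an illustration under stated assumptions: the coefficients are passed in as fixed positive numbers rather than produced by a learned network, and the function name `qplex_mix` is hypothetical.

```python
import numpy as np


def qplex_mix(q_individual, actions, lambdas):
    """Evaluate the mixing formula above for one state and one joint action.

    q_individual[i] is agent i's value vector, actions[i] its chosen action,
    and lambdas[i] a positive weight standing in for lambda_i(s, a).
    """
    lambdas = np.asarray(lambdas, dtype=float)
    if np.any(lambdas <= 0):
        raise ValueError("lambda_i(s, a) must be positive")
    max_q = np.array([np.max(q) for q in q_individual], dtype=float)
    chosen = np.array([q[a] for q, a in zip(q_individual, actions)], dtype=float)
    advantages = chosen - max_q  # never positive; zero exactly for greedy actions
    return float(max_q.sum() + lambdas @ advantages)


q = [np.array([1.0, 3.0]), np.array([2.0, 0.0])]
print(qplex_mix(q, (1, 0), [0.5, 2.0]))  # greedy joint action: only the baseline remains
print(qplex_mix(q, (0, 1), [0.5, 2.0]))  # non-greedy: weighted negative advantages are added
```

Because every advantage is non-positive and every coefficient is positive, the greedy joint action always attains the baseline value, which is why this form keeps the individual and joint argmaxes aligned.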

|

|

||||||

|

|

||||||

> Continue next time...

|

|

||||||

@@ -1,223 +0,0 @@

|

|||||||

# CSE510 Deep Reinforcement Learning (Lecture 26)

|

|

||||||

|

|

||||||

## Continue on Real-World Practical Challenges for RL

|

|

||||||

|

|

||||||

### Factored multi-agent RL

|

|

||||||

|

|

||||||

- Sample efficiency -> Shared Learning

|

|

||||||

- Complexity -> High-Order Factorization

|

|

||||||

- Partial Observability -> Communication Learning

|

|

||||||

- Sparse reward -> Coordinated Exploration

|

|

||||||

|

|

||||||

#### Parameter Sharing vs. Diversity

|

|

||||||

|

|

||||||

- Parameter Sharing is critical for deep MARL methods

|

|

||||||

- However, agents tend to acquire homogeneous behaviors

|

|

||||||

- Diversity is essential for exploration and practical tasks

|

|

||||||

|

|

||||||

[link to paper: Google Football](https://arxiv.org/pdf/1907.11180)

|

|

||||||

|

|

||||||

Schematics of Our Approach: Celebrating Diversity in Shared MARL (CDS)

|

|

||||||

|

|

||||||

- In representation, CDS allows MARL to adaptively decide

|

|

||||||

when to share learning

|

|

||||||

- Encouraging Diversity in Optimization

|

|

||||||

|

|

||||||

In optimization, maximizing an information-theoretic objective to achieve identity-aware diversity

|

|

||||||

|

|

||||||

$$

|

|

||||||

\begin{aligned}

|

|

||||||

I^\pi(\tau_T;id)&=H(\tau_T)-H(\tau_T|id)=\mathbb{E}_{id,\tau_T\sim \pi}\left[\log \frac{p(\tau_T|id)}{p(\tau_T)}\right]\\

|

|

||||||

&= \mathbb{E}_{id,\tau}\left[ \log \frac{p(o_0|id)}{p(o_0)}+\sum_{t=0}^{T-1}\left(\log\frac{p(a_t|\tau_t,id)}{p(a_t|\tau_t)}+\log \frac{p(o_{t+1}|\tau_t,a_t,id)}{p(o_{t+1}|\tau_t,a_t)}\right)\right]

|

|

||||||

\end{aligned}

|

|

||||||

$$

|

|

||||||

|

|

||||||

Here: $\sum_{t=0}^{T-1}\log\frac{p(a_t|\tau_t,id)}{p(a_t|\tau_t)}$ represents the action diversity.

|

|

||||||

|

|

||||||

$\log \frac{p(o_{t+1}|\tau_t,a_t,id)}{p(o_{t+1}|\tau_t,a_t)}$ represents the observation diversity.
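
To make the action-diversity term concrete, the sketch below computes the single-step quantity $\mathbb{E}_{id,a}\left[\log\frac{p(a|id)}{p(a)}\right]$ for tabular policies. It is a simplification under stated assumptions: the conditioning on the trajectory $\tau_t$ is dropped, agent identities are uniform unless given, and the function name `action_identity_mi` is hypothetical.

```python
import numpy as np


def action_identity_mi(policies, id_probs=None):
    """I(a; id) = E_{id, a}[log p(a | id) / p(a)] for tabular policies, in nats.

    policies[k, a] = p(a | id = k); id_probs defaults to uniform over agents.
    """
    policies = np.asarray(policies, dtype=float)
    k = policies.shape[0]
    if id_probs is None:
        id_probs = np.full(k, 1.0 / k)
    else:
        id_probs = np.asarray(id_probs, dtype=float)
    marginal = id_probs @ policies          # p(a), averaged over identities
    joint = id_probs[:, None] * policies    # p(id, a)
    mask = joint > 0                        # 0 * log 0 is treated as 0
    ratio = policies[mask] / np.broadcast_to(marginal, policies.shape)[mask]
    return float(np.sum(joint[mask] * np.log(ratio)))


# Identical policies carry no information about identity.
print(action_identity_mi([[0.5, 0.5], [0.5, 0.5]]))
# Two agents with distinct deterministic policies reach the maximum, log 2.
print(action_identity_mi([[1.0, 0.0], [0.0, 1.0]]))
```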

|

|

||||||

|

|

||||||

### Summary

|

|

||||||

|

|

||||||

- MARL plays a critical role for AI, but is still at an early stage

|

|

||||||

- Value factorization enables scalable MARL

|

|

||||||

- Linear factorization is sometimes surprisingly effective

|

|

||||||

- Non-linear factorization shows promise in offline settings

|

|

||||||

- Parameter sharing plays an important role for deep MARL

|

|

||||||

- Diversity and dynamic parameter sharing can be critical for complex cooperative tasks

|

|

||||||

|

|

||||||

## Challenges and open problems in DRL

|

|

||||||

|

|

||||||

### Overview for Reinforcement Learning Algorithms

|

|

||||||

|

|

||||||

Recall from lecture 2

|

|

||||||

|

|

||||||

Better sample efficiency to less sample efficiency:

|

|

||||||

|

|

||||||

- Model-based

|

|

||||||

- Off-policy/Q-learning

|

|

||||||

- Actor-critic

|

|

||||||

- On-policy/Policy gradient

|

|

||||||

- Evolutionary/Gradient-free

|

|

||||||

|

|

||||||

#### Model-Based

|

|

||||||

|

|

||||||

- Learn the model of the world, then plan using the model

|

|

||||||

- Update model often

|

|

||||||

- Re-plan often

|

|

||||||

|

|

||||||

#### Value-Based

|

|

||||||

|

|

||||||

- Learn the state or state-action value

|

|

||||||

- Act by choosing best action in state

|

|

||||||

- Exploration is a necessary add-on

|

|

||||||

|

|

||||||

#### Policy-based

|

|

||||||

|

|

||||||

- Learn the stochastic policy function that maps state to action

|

|

||||||

- Act by sampling policy

|

|

||||||

- Exploration is baked in

|

|

||||||

|

|

||||||

### Where are we?

|

|

||||||

|

|

||||||

Deep RL has achieved impressive results in games, robotics, control, and decision systems.

|

|

||||||

|

|

||||||

But it is still far from a general, reliable, and efficient learning paradigm.

|

|

||||||

|

|

||||||

Today: what limits Deep RL, what's being worked on, and what's still open.

|

|

||||||

|

|

||||||

### Outline of challenges

|

|

||||||

|

|

||||||

- Offline RL

|

|

||||||

- Multi-Agent complexity

|

|

||||||

- Sample efficiency & data reuse

|

|

||||||

- Stability & reproducibility

|

|

||||||

- Generalization & distribution shift

|

|

||||||

- Scalable model-based RL

|

|

||||||

- Safety

|

|

||||||

- Theory gaps & evaluation

|

|

||||||

|

|

||||||

### Sample inefficiency

|

|

||||||

|

|

||||||

Model-free deep RL often needs millions or billions of steps

|

|

||||||

|

|

||||||

- Humans with 15 minutes of learning tend to outperform DDQN trained for 115 hours

|

|

||||||

- OpenAI Five for Dota 2: 180 years of playing time per day

|

|

||||||

|

|

||||||

Real-world systems can't afford this

|

|

||||||

|

|

||||||

Root causes: high-variance gradients, weak priors, poor credit assignment.

|

|

||||||

|

|

||||||

Open direction for sample efficiency

|

|

||||||

|

|

||||||

- Better data reuse: off-policy learning & replay improvements

|

|

||||||

- Self-supervised representation learning for control (learning from interacting with the environment)

|

|

||||||

- Hybrid model-based/model-free approaches

|

|

||||||

- Transfer & pre-training on large datasets

|

|

||||||

- Knowledge-driven RL: leveraging pre-trained models

|

|

||||||

|

|

||||||

#### Knowledge-Driven RL: Motivation

|

|

||||||

|

|

||||||

Current LLMs are not good at decision making

|

|

||||||

|

|

||||||

Pros: rich knowledge

|

|

||||||

|

|

||||||

Cons: auto-regressive decoding lacks long-term memory

|

|

||||||

|

|

||||||

Reinforcement learning in decision making

|

|

||||||

|

|

||||||

Pros: Go beyond human intelligence

|

|

||||||

|

|

||||||

Cons: sample inefficiency

|

|

||||||

|

|

||||||

### Instability & the Deadly triad

|

|

||||||

|

|

||||||

Function approximation + bootstrapping + off-policy learning can diverge

|

|

||||||

|

|

||||||

Even stable algorithms (PPO) can be unstable

|

|

||||||

|

|

||||||

#### Open direction for Stability

|

|

||||||

|

|

||||||

Better optimization landscapes + regularization

|

|

||||||

|

|

||||||

Calibration/monitoring tools for RL training

|

|

||||||

|

|

||||||

Architectures with built-in inductive biases (e.g., equivariance)

|

|

||||||

|

|

||||||

### Reproducibility & Evaluation

|

|

||||||

|

|

||||||

Results often depend on random seeds, codebase, and compute budget

|

|

||||||

|

|

||||||

Benchmarks can be overfit; comparisons are often apples-to-oranges

|

|

||||||

|

|

||||||

Offline evaluation is especially tricky

|

|

||||||

|

|

||||||

#### Toward Better Evaluation

|

|

||||||

|

|

||||||

- Robustness checks and ablations

|

|

||||||

- Out-of-distribution test suites

|

|

||||||

- Realistic benchmarks beyond games (e.g., science and healthcare)

|

|

||||||

|

|

||||||

### Generalization & Distribution Shift

|

|

||||||

|

|

||||||

Policies overfit to training environments and fail under small challenges

|

|

||||||

|

|

||||||

Sim-to-real gap, sensor noise, morphology changes, domain drift.

|

|

||||||

|

|

||||||

Requires learning invariance and robust decision rules.

|

|

||||||

|

|

||||||

#### Open direction for Generalization

|

|

||||||

|

|

||||||

- Domain randomization + system identification

|

|

||||||

- Robust/risk-sensitive RL

|

|

||||||

- Representation learning for invariance

|

|

||||||

- Meta-RL and fast adaptation

|

|

||||||

|

|

||||||

### Model-based RL: Promise & Pitfalls

|

|

||||||

|

|

||||||

- Learned models enable planning and sample efficiency

|

|

||||||

- But distribution mismatch and model exploitation can break policies

|

|

||||||

- Long-horizon imagination amplifies errors

|

|

||||||

- Model-learning is challenging

|

|

||||||

|

|

||||||

### Safety, alignment, and constraints

|

|

||||||

|

|

||||||

Reward mis-specification -> unsafe or unintended behavior

|

|

||||||

|

|

||||||

Need to respect constraints: energy, collisions, ethics, regulation

|

|

||||||

|

|

||||||

Exploration itself may be unsafe

|

|

||||||

|

|

||||||

#### Open direction for Safety RL

|

|

||||||

|

|

||||||

- Constraint RL (Lagrangians, CBFs, she)

|

|

||||||

|

|

||||||

### Theory Gaps & Evaluation

|

|

||||||

|

|

||||||

Deep RL lacks strong general guarantees.

|

|

||||||

|

|

||||||

We don't fully understand when/why it works

|

|

||||||

|

|

||||||

Bridging theory and

|

|

||||||

|

|

||||||

#### Promising theory directions

|

|

||||||

|

|

||||||

Optimization theory of RL objectives

|

|

||||||

|

|

||||||

Generalization and representation learning bounds

|

|

||||||

|

|

||||||

Finite-sample analysis s

|

|

||||||

|

|

||||||

### Connection to foundation models

|

|

||||||

|

|

||||||

- Pre-training on large scale experience

|

|

||||||

- World models as sequence predictors

|

|

||||||

- RLHF/preference optimization for alignment

|

|

||||||

- Open problems: grounding

|

|

||||||

|

|

||||||

### What to expect in the next 3-5 years

|

|

||||||

|

|

||||||

Unified model-based offline + safe RL stacks

|

|

||||||

|

|

||||||

Large pretrained decision models

|

|

||||||

|

|

||||||

Deployment in high-stakes domains

|

|

||||||

@@ -1,32 +1,61 @@

|

|||||||

export default {

|

export default {

|

||||||

index: "Course Description",

|

menu: {

|

||||||

"---":{

|

title: 'Home',

|

||||||

type: 'separator'

|

type: 'menu',

|

||||||

|

items: {

|

||||||

|

index: {

|

||||||

|

title: 'Home',

|

||||||

|

href: '/'

|

||||||

|

},

|

||||||

|

about: {

|

||||||

|

title: 'About',

|

||||||

|

href: '/about'

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

title: 'Contact Me',

|

||||||

|

href: '/contact'

|

||||||

|

}

|

||||||

|

},

|

||||||

},

|

},

|

||||||

CSE510_L1: "CSE510 Deep Reinforcement Learning (Lecture 1)",

|

Math3200'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L2: "CSE510 Deep Reinforcement Learning (Lecture 2)",

|

Math429'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L3: "CSE510 Deep Reinforcement Learning (Lecture 3)",

|

Math4111'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L4: "CSE510 Deep Reinforcement Learning (Lecture 4)",

|

Math4121'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L5: "CSE510 Deep Reinforcement Learning (Lecture 5)",

|

Math4201'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L6: "CSE510 Deep Reinforcement Learning (Lecture 6)",

|

Math416'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L7: "CSE510 Deep Reinforcement Learning (Lecture 7)",

|

Math401'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L8: "CSE510 Deep Reinforcement Learning (Lecture 8)",

|

CSE332S'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L9: "CSE510 Deep Reinforcement Learning (Lecture 9)",

|

CSE347'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L10: "CSE510 Deep Reinforcement Learning (Lecture 10)",

|

CSE442T'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L11: "CSE510 Deep Reinforcement Learning (Lecture 11)",

|

CSE5313'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L12: "CSE510 Deep Reinforcement Learning (Lecture 12)",

|

CSE510'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L13: "CSE510 Deep Reinforcement Learning (Lecture 13)",

|

CSE559A'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L14: "CSE510 Deep Reinforcement Learning (Lecture 14)",

|

CSE5519'CSE510_link\s*:\s*(\{\s+.+\s+.+)\s+.+\s+.+\s+.+\s+(\},)'

|

||||||

CSE510_L15: "CSE510 Deep Reinforcement Learning (Lecture 15)",

|

Swap: {

|

||||||

CSE510_L16: "CSE510 Deep Reinforcement Learning (Lecture 16)",

|

display: 'hidden',

|

||||||

CSE510_L17: "CSE510 Deep Reinforcement Learning (Lecture 17)",

|

theme:{

|

||||||

CSE510_L18: "CSE510 Deep Reinforcement Learning (Lecture 18)",

|

timestamp: true,

|

||||||

CSE510_L19: "CSE510 Deep Reinforcement Learning (Lecture 19)",

|

}

|

||||||

CSE510_L20: "CSE510 Deep Reinforcement Learning (Lecture 20)",

|

},

|

||||||

CSE510_L21: "CSE510 Deep Reinforcement Learning (Lecture 21)",

|

index: {

|

||||||

CSE510_L22: "CSE510 Deep Reinforcement Learning (Lecture 22)",

|

display: 'hidden',

|

||||||

CSE510_L23: "CSE510 Deep Reinforcement Learning (Lecture 23)",

|

theme:{

|

||||||

CSE510_L24: "CSE510 Deep Reinforcement Learning (Lecture 24)",

|

sidebar: false,

|

||||||

CSE510_L25: "CSE510 Deep Reinforcement Learning (Lecture 25)",

|

timestamp: true,

|

||||||

CSE510_L26: "CSE510 Deep Reinforcement Learning (Lecture 26)",

|

}

|

||||||

}

|

},

|

||||||

|

about: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

},

|

||||||

|

contact: {

|

||||||

|

display: 'hidden',

|

||||||

|

theme:{

|

||||||

|

sidebar: false,

|

||||||

|

timestamp: true,

|

||||||

|

}

|

||||||

|

}

|

||||||

|

}

|

||||||

@@ -140,7 +140,6 @@ $$

|

|||||||

\begin{aligned}

|

\begin{aligned}

|

||||||

H(Y|X=x)&=\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 \frac{1}{Pr(Y=y|X=x)} \\

|

H(Y|X=x)&=\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 \frac{1}{Pr(Y=y|X=x)} \\

|

||||||

&=-\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 Pr(Y=y|X=x) \\

|

&=-\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 Pr(Y=y|X=x) \\

|

||||||

\end{aligned}

|

|

||||||

$$

|

$$

|

||||||

|

|

||||||

The conditional entropy $H(Y|X)$ is defined as:

|

The conditional entropy $H(Y|X)$ is defined as:

|

||||||

@@ -151,7 +150,6 @@ H(Y|X)&=\mathbb{E}_{x\sim X}[H(Y|X=x)] \\

|

|||||||

&=\sum_{x\in \mathcal{X}} Pr(X=x)H(Y|X=x) \\

|

&=\sum_{x\in \mathcal{X}} Pr(X=x)H(Y|X=x) \\

|

||||||

&=-\sum_{x\in \mathcal{X}, y\in \mathcal{Y}} Pr(X=x, Y=y) \log_2 Pr(Y=y|X=x) \\

|

&=-\sum_{x\in \mathcal{X}, y\in \mathcal{Y}} Pr(X=x, Y=y) \log_2 Pr(Y=y|X=x) \\

|

||||||

&=-\sum_{x\in \mathcal{X}} Pr(X=x)\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 Pr(Y=y|X=x) \\

|

&=-\sum_{x\in \mathcal{X}} Pr(X=x)\sum_{y\in \mathcal{Y}} Pr(Y=y|X=x) \log_2 Pr(Y=y|X=x) \\

|

||||||

\end{aligned}

|

|

||||||

$$

|

$$

|

||||||

|

|

||||||

Notes:

|

Notes:

|

||||||

|

|||||||

@@ -196,7 +196,7 @@ $\operatorname{Pr}(s_\mathcal{Z}|m_1, \cdots, m_{t-z}) = \operatorname{Pr}(U_1,

|

|||||||

|

|

||||||

Conclude similarly by the law of total probability.

|

Conclude similarly by the law of total probability.

|

||||||

|

|

||||||

$\operatorname{Pr}(s_\mathcal{Z}|m_1, \cdots, m_{t-z}) = \operatorname{Pr}(s_\mathcal{Z}) \implies I(S_\mathcal{Z}; M_1, \cdots, M_{t-z}) = 0$.

|

$\operatorname{Pr}(s_\mathcal{Z}|m_1, \cdots, m_{t-z}) = \operatorname{Pr}(s_\mathcal{Z}) \implies I(S_\mathcal{Z}; M_1, \cdots, M_{t-z}) = 0.

|

||||||

|

|

||||||

### Conditional mutual information

|

### Conditional mutual information

|

||||||

|

|

||||||

@@ -246,14 +246,14 @@ A: Fix any $\mathcal{T} = \{i_1, \cdots, i_t\} \subseteq [n]$ of size $t$, and l

|

|||||||

$$

|

$$

|

||||||

\begin{aligned}

|

\begin{aligned}

|

||||||

H(M) &= I(M; S_\mathcal{T}) + H(M|S_\mathcal{T}) \text{(by def. of mutual information)}\\

|

H(M) &= I(M; S_\mathcal{T}) + H(M|S_\mathcal{T}) \text{(by def. of mutual information)}\\

|

||||||

&= I(M; S_\mathcal{T}) \text{(since }S_\mathcal{T}\text{ suffice to decode M)}\\

|

&= I(M; S_\mathcal{T}) \text{(since S_\mathcal{T} suffice to decode M)}\\

|

||||||

&= I(M; S_{i_t}, S_\mathcal{Z}) \text{(since }S_\mathcal{T} = S_\mathcal{Z} ∪ S_{i_t})\\

|

&= I(M; S_{i_t}, S_\mathcal{Z}) \text{(since S_\mathcal{T} = S_\mathcal{Z} ∪ S_{i_t})}\\

|

||||||

&= I(M; S_{i_t}|S_\mathcal{Z}) + I(M; S_\mathcal{Z}) \text{(chain rule)}\\

|

&= I(M; S_{i_t}|S_\mathcal{Z}) + I(M; S_\mathcal{Z}) \text{(chain rule)}\\

|

||||||

&= I(M; S_{i_t}|S_\mathcal{Z}) \text{(since }\mathcal{Z}\leq z \text{, it reveals nothing about M)}\\

|

&= I(M; S_{i_t}|S_\mathcal{Z}) \text{(since \mathcal{Z} ≤ z, it reveals nothing about M)}\\

|

||||||

&= I(S_{i_t}; M|S_\mathcal{Z}) \text{(symmetry of mutual information)}\\

|

&= I(S_{i_t}; M|S_\mathcal{Z}) \text{(symmetry of mutual information)}\\

|

||||||

&= H(S_{i_t}|S_\mathcal{Z}) - H(S_{i_t}|M,S_\mathcal{Z}) \text{(def. of conditional mutual information)}\\

|

&= H(S_{i_t}|S_\mathcal{Z}) - H(S_{i_t}|M,S_\mathcal{Z}) \text{(def. of conditional mutual information)}\\

|

||||||

&\leq H(S_{i_t}|S_\mathcal{Z}) \text{(entropy is non-negative)}\\

|

\leq H(S_{i_t}|S_\mathcal{Z}) \text{(entropy is non-negative)}\\

|

||||||

&\leq H(S_{i_t}) \text{(conditioning reduces entropy)} \\

|

\leq H(S_{i_t}|S_\mathcal{Z}) \text{(conditioning reduces entropy). \\

|

||||||

\end{aligned}

|

\end{aligned}

|

||||||

$$

|

$$

|

||||||

|

|

||||||

|

|||||||

@@ -160,14 +160,14 @@ Can we trade the recovery threshold $K$ for a smaller $s$?

|

|||||||

|

|

||||||

#### Construction of Short-Dot codes

|

#### Construction of Short-Dot codes

|

||||||

|

|

||||||

Choose a super-regular matrix $B\in \mathbb{F}^{P\times K}$, where $P$ is the number of worker nodes.

|

Choose a super-regular matrix $B\in \mathbb{F}^{P\time K}$, where $P$ is the number of worker nodes.

|

||||||

|

|

||||||

- A matrix is super-regular if every square submatrix is invertible.

|

- A matrix is super-regular if every square submatrix is invertible.

|

||||||

- Lagrange/Cauchy matrix is super-regular (next lecture).

|

- Lagrange/Cauchy matrix is super-regular (next lecture).

|

||||||

|

|

||||||

Create matrix $\tilde{A}$ by stacking some $Z\in \mathbb{F}^{(K-M)\times N}$ below matrix $A$.

|

Create matrix $\tilde{A}$ by stacking some $Z\in \mathbb{F}^{(K-M)\times N}$ below matrix $A$.

|

||||||

|

|

||||||

Let $F=B\cdot \tilde{A}\in \mathbb{F}^{P\times N}$.

|

Let $F=B\dot \tilde{A}\in \mathbb{F}^{P\times N}$.

|

||||||

|

|

||||||

**Short-Dot**: create matrix $F\in \mathbb{F}^{P\times N}$ such that:

|

**Short-Dot**: create matrix $F\in \mathbb{F}^{P\times N}$ such that:

|

||||||

|

|

||||||

|

|||||||

@@ -1,395 +0,0 @@

|

|||||||

# CSE5313 Coding and information theory for data science (Lecture 24)

|

|

||||||

|

|

||||||

## Continue on coded computing

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

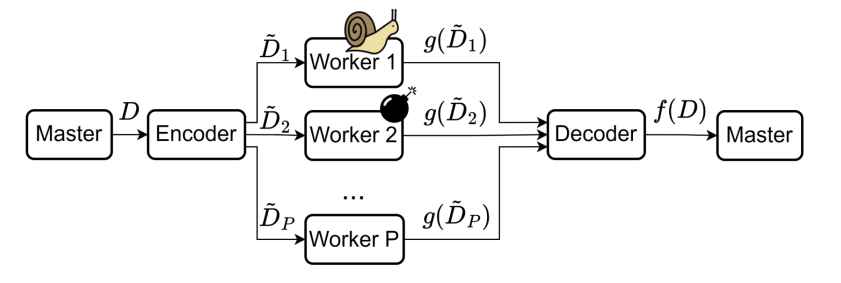

Matrix-vector multiplication: $y=Ax$, where $A\in \mathbb{F}^{M\times N},x\in \mathbb{F}^N$

|

|

||||||

|

|

||||||

- MDS codes.

|

|

||||||

- Recovery threshold $K=M$.

|

|

||||||

- Short-dot codes.

|

|

||||||

- Recovery threshold $K\geq M$.

|

|

||||||

- Every node receives at most $s=\frac{P-K+M}{P}\cdot N$ elements of $x$.

|

|

||||||

|

|

||||||

### Matrix-matrix multiplication

|

|

||||||

|

|

||||||

Problem Formulation:

|

|

||||||

|

|

||||||

- $A=[A_0,A_1,\ldots,A_{M-1}]\in \mathbb{F}^{L\times L}$, $B=[B_0,B_1,\ldots,B_{M-1}]\in \mathbb{F}^{L\times L}$

|

|

||||||

- $A_m,B_m$ are submatrices of $A,B$.

|

|

||||||

- We want to compute $C=A^\top B$.

|

|

||||||

|

|

||||||

Trivial solution:

|

|

||||||

|

|

||||||

- Index each worker node by $m,n\in [0,M-1]$.

|

|

||||||

- Worker node $(m,n)$ performs matrix multiplication $A_m^\top\cdot B_n$.

|

|

||||||

- Need $P=M^2$ nodes.

|

|

||||||

- No erasure tolerance.

|

|

||||||

|

|

||||||

Can we do better?

|

|

||||||

|

|

||||||

#### 1-D MDS Method

|

|

||||||

|

|

||||||

Create $[\tilde{A}_0,\tilde{A}_1,\ldots,\tilde{A}_{S-1}]$ by encoding $[A_0,A_1,\ldots,A_{M-1}]$ with some $(S,M)$ MDS code.

|

|

||||||

|

|

||||||

Need $P=SM$ worker nodes, and index each one by $s\in [0,S-1], n\in [0,M-1]$.

|

|

||||||

|

|

||||||

Worker node $(s,n)$ performs matrix multiplication $\tilde{A}_s^\top\cdot B_n$.

|

|

||||||

|

|

||||||

$$

|

|

||||||

\begin{bmatrix}

|

|

||||||

A_0^\top\\

|

|

||||||

A_1^\top\\

|

|

||||||

A_0^\top+A_1^\top

|

|

||||||

\end{bmatrix}

|

|

||||||

\begin{bmatrix}

|

|

||||||

B_0 & B_1

|

|

||||||

\end{bmatrix}

|

|

||||||

$$

|

|

||||||

|

|

||||||

Need $M$ responses from each column, so each column tolerates up to $S-M$ missing responses.

|

|

||||||

|

|

||||||

The recovery threshold is $K=P-S+M$ nodes.

|

|

||||||

|

|

||||||

The example above uses a simple parity-check code, which tolerates one missing response per column.

|

|

||||||

|

|

||||||

#### 2-D MDS Method

|

|

||||||

|

|

||||||

Encode $[A_0,A_1,\ldots,A_{M-1}]$ with some $(S,M)$ MDS code.

|

|

||||||

|

|

||||||

Encode $[B_0,B_1,\ldots,B_{M-1}]$ with some $(S,M)$ MDS code.

|

|

||||||

|

|

||||||

Need $P=S^2$ nodes.

|

|

||||||

|

|

||||||

$$

|

|

||||||

\begin{bmatrix}

|

|

||||||

A_0^\top\\

|

|

||||||

A_1^\top\\

|

|

||||||

A_0^\top+A_1^\top

|

|

||||||

\end{bmatrix}

|

|

||||||

\begin{bmatrix}

|

|

||||||

B_0 & B_1 & B_0+B_1

|

|

||||||

\end{bmatrix}

|

|

||||||

$$

|

|

||||||

|

|

||||||

Decodability depends on the pattern.

|

|

||||||

|

|

||||||

- Consider an $S\times S$ bipartite graph (rows on left, columns on right).

|

|

||||||

- Draw an $(i,j)$ edge if $\tilde{A}_i^\top\cdot \tilde{B}_j$ is missing

|

|

||||||

- Row $i$ is decodable if and only if the degree of $i$'th left node $\leq S-M$.

|

|

||||||

- Column $j$ is decodable if and only if the degree of $j$'th right node $\leq S-M$.

|

|

||||||

|

|

||||||

Peeling algorithm:

|

|

||||||

|

|

||||||

- Traverse the graph.

|

|

||||||

- If there exists a node $v$ with $\deg v\leq S-M$, remove its edges.

|

|

||||||

- Repeat.
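
The peeling steps above can be sketched in a few lines of Python. This is only an illustrative sketch: the function name `peel` and the representation of missing products as a set of `(row, col)` pairs are assumptions, not part of the lecture.

```python
def peel(missing, S, M):
    """Return True if the erasure pattern is decodable by peeling.

    missing: set of (row, col) pairs whose product is missing.
    A row or column with at most S - M missing entries can be decoded
    with the (S, M) MDS code, which removes all of its edges.
    """
    edges = set(missing)
    progress = True
    while edges and progress:
        progress = False
        for axis in (0, 1):  # 0: rows (left nodes), 1: columns (right nodes)
            for v in range(S):
                incident = {e for e in edges if e[axis] == v}
                if incident and len(incident) <= S - M:
                    edges -= incident  # this row/column is now fully known
                    progress = True
    return not edges


# S = 3, M = 2: every row and column tolerates S - M = 1 missing product.
print(peel({(0, 0), (0, 1), (1, 0)}, 3, 2))
print(peel({(0, 0), (0, 1), (1, 0), (1, 1)}, 3, 2))
```

The second pattern is a complete $2\times 2$ subgraph in which every node has degree $2>S-M$, so peeling never starts.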

|

|

||||||

|

|

||||||

Corollary:

|

|

||||||

|

|

||||||

- A pattern is decodable if and only if the above graph **does not** contain a subgraph in which every node has degree larger than $S-M$.

|

|

||||||

|

|

||||||

> [!NOTE]

|

|

||||||

>

|

|

||||||

> 1. $K_{1D-MDS}=P-S+M=\Theta(P)$ (linearly)

|

|

||||||

> 2. $K_{2D-MDS}=P-(S-M+1)^2+1$.

|

|

||||||

> - Consider $S\times S$ bipartite graph with $(S-M+1)\times (S-M+1)$ complete subgraph.

|

|

||||||

> - There exists subgraph with all degrees larger than $S-M\implies$ not decodable.

|

|

||||||

> - On the other hand: Fewer than $(S-M+1)^2$ edges cannot form a subgraph with all degrees $>S-M$.

|

|

||||||

> - $K$ scales sub-linearly with $P$.

|

|

||||||

> 3. $K_{product}<P-M^2=S^2-M^2=\Theta(\sqrt{P})$

|

|

||||||

>

|

|

||||||

> Our goal is to get rid of $P$.

|

|

||||||

|

|

||||||

### Polynomial codes

|

|

||||||

|

|

||||||

#### Polynomial representation

|

|

||||||

|

|

||||||

Coefficient representation of a polynomial:

|

|

||||||

|

|

||||||

- $f(x)=f_dx^d+f_{d-1}x^{d-1}+\cdots+f_1x+f_0$

|

|

||||||

- Uniquely defined by coefficients $[f_d,f_{d-1},\ldots,f_0]$.

|

|

||||||

|

|

||||||

Value representation of a polynomial:

|

|

||||||

|

|

||||||

- Theorem: A polynomial of degree $d$ is uniquely determined by $d+1$ points.

|

|

||||||

- Proof Outline: First create a polynomial of degree $d$ from the $d+1$ points using Lagrange interpolation, and show such polynomial is unique.

|

|

||||||

- Uniquely defined by evaluations $[(\alpha_1,f(\alpha_1)),\ldots,(\alpha_{d+1},f(\alpha_{d+1}))]$ at $d+1$ distinct points.

|

|

||||||

|

|

||||||

Why should we want value representation?

|

|

||||||

|

|

||||||

- With coefficient representation, polynomial product takes $O(d^2)$ multiplications.

|

|

||||||

- With value representation, polynomial product takes $2d+1$ multiplications.

|

|

||||||

|

|

||||||

#### Definition of a polynomial code

|

|

||||||

|

|

||||||

[link to paper](https://arxiv.org/pdf/1705.10464)

|

|

||||||

|

|

||||||

Problem formulation:

|

|

||||||

|

|

||||||

$$

|

|

||||||

A=[A_0,A_1,\ldots,A_{M-1}]\in \mathbb{F}^{L\times L}, B=[B_0,B_1,\ldots,B_{M-1}]\in \mathbb{F}^{L\times L}

|

|

||||||

$$

|

|

||||||

|

|

||||||

We want to compute $C=A^\top B$.

|

|

||||||

|

|

||||||

Define *matrix* polynomials:

|

|

||||||

|

|

||||||

$p_A(x)=\sum_{i=0}^{M-1} A_i x^i$, degree $M-1$

|

|

||||||

|

|

||||||

$p_B(x)=\sum_{i=0}^{M-1} B_i x^{iM}$, degree $M(M-1)$

|

|

||||||

|

|

||||||

where each $A_i,B_i$ are matrices

|

|

||||||

|

|

||||||

We have

|

|

||||||

|

|

||||||

$$

|

|

||||||

h(x)=p_A(x)^\top p_B(x)=\sum_{i=0}^{M-1}\sum_{j=0}^{M-1} A_i^\top B_j x^{i+jM}

|

|

||||||

$$

|

|

||||||

|

|

||||||

$\deg h(x)\leq M(M-1)+M-1=M^2-1$

|

|

||||||

|

|

||||||

Observe that

|

|

||||||

|

|

||||||

$$

|

|

||||||

x^{i_1+j_1M}=x^{i_2+j_2M}

|

|

||||||

$$

|

|

||||||

if and only if $i_1=i_2$ and $j_1=j_2$ (for $i_1,i_2,j_1,j_2\in [0,M-1]$), so every product $A_i^\top B_j$ gets its own power of $x$.

|

|

||||||

|

|

||||||

The coefficient of $x^{i+jM}$ is $A_i^\top B_j$.

|

|

||||||

|

|

||||||

Computing $C=A^\top B$ is equivalent to finding the coefficient representation of $h(x)$.
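
As a small worked instance (assuming $M=2$, chosen here only for illustration), the two matrix polynomials and their product are:

$$
\begin{aligned}
p_A(x)&=A_0+A_1x,\qquad p_B(x)=B_0+B_1x^2,\\
h(x)&=p_A(x)^\top p_B(x)=A_0^\top B_0+A_1^\top B_0\,x+A_0^\top B_1\,x^2+A_1^\top B_1\,x^3.
\end{aligned}
$$

The four coefficients are exactly the four blocks of $C=A^\top B$, and $\deg h=3=M^2-1$.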

|

|

||||||

|

|

||||||

#### Encoding of polynomial codes

|

|

||||||

|

|

||||||

The master chooses distinct $\omega_0,\omega_1,\ldots,\omega_{P-1}\in \mathbb{F}$.

|

|

||||||

|

|

||||||

- Note that this requires $|\mathbb{F}|\geq P$.

|

|

||||||

|

|

||||||

For every node $i\in [0,P-1]$, the master computes $\tilde{A}_i=p_A(\omega_i)$

|

|

||||||

|

|

||||||

- Equivalent to multiplying $[A_0^\top,A_1^\top,\ldots,A_{M-1}^\top]$ by a Vandermonde matrix over $\omega_0,\omega_1,\ldots,\omega_{P-1}$.

|

|

||||||

- Can be sped up using FFT.

|

|

||||||

|

|

||||||

Similarly, the master computes $\tilde{B}_i=p_B(\omega_i)$ for every node $i\in [0,P-1]$.

|

|

||||||

|

|

||||||

Every node $i\in [0,P-1]$ computes and returns $c_i=p_A(\omega_i)^\top p_B(\omega_i)$ to the master.

|

|

||||||

|

|

||||||

$c_i$ is the evaluation of the polynomial $h(x)=p_A(x)^\top p_B(x)$ at $\omega_i$.

|

|

||||||

|

|

||||||

Recall that $h(x)=\sum_{i=0}^{M-1}\sum_{j=0}^{M-1} A_i^\top B_j x^{i+jM}$.

|

|

||||||

|

|

||||||

- Computing $C=A^\top B$ is equivalent to finding the coefficient representation of $h(x)$.

|

|

||||||

|

|

||||||

Recall that a polynomial of degree $d$ can be uniquely defined by $d+1$ points.

|

|

||||||

|

|

||||||

- Since $\deg h(x)\leq M^2-1$, with $M^2$ evaluations of $h(x)$ we can recover the coefficient representation of $h(x)$.

|

|

||||||

|

|

||||||

The recovery threshold $K=M^2$, independent of $P$, the number of worker nodes.

|

|

||||||

|

|

||||||

Done.
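
The encode / compute / decode pipeline above can be checked on a toy instance. The following is a minimal sketch under stated assumptions: the field is the prime field of size 101, $M=2$, the matrices are $2\times 2$ so every block product $A_i^\top B_j$ is $1\times 1$, and all helper names (`eval_poly`, `basis_coeffs`, `interpolate`) are hypothetical. Decoding uses plain Lagrange interpolation rather than any optimized method.

```python
Q = 101  # toy prime field size (assumption for illustration)

def transpose(X):
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) % Q for col in zip(*Y)] for row in X]

def eval_poly(blocks, exps, w):
    """Evaluate the matrix polynomial sum_i blocks[i] * w**exps[i], entrywise mod Q."""
    rows, cols = len(blocks[0]), len(blocks[0][0])
    return [[sum(blk[r][c] * pow(w, e, Q) for blk, e in zip(blocks, exps)) % Q
             for c in range(cols)] for r in range(rows)]

def basis_coeffs(points, k):
    """Coefficients (lowest degree first) of the k-th Lagrange basis polynomial."""
    coeffs, denom = [1], 1
    for j, wj in enumerate(points):
        if j == k:
            continue
        shifted = [0] + coeffs                           # x * current polynomial
        scaled = [(-wj * c) % Q for c in coeffs] + [0]   # -wj * current polynomial
        coeffs = [(a + b) % Q for a, b in zip(shifted, scaled)]
        denom = denom * (points[k] - wj) % Q
    inv = pow(denom, -1, Q)
    return [c * inv % Q for c in coeffs]

def interpolate(points, values):
    """Recover scalar polynomial coefficients from len(points) evaluations."""
    out = [0] * len(points)
    for k in range(len(points)):
        for d, c in enumerate(basis_coeffs(points, k)):
            out[d] = (out[d] + c * values[k]) % Q
    return out

M = 2
A_blocks = [[[1], [3]], [[2], [4]]]   # column blocks A_0, A_1 of A = [[1, 2], [3, 4]]
B_blocks = [[[5], [7]], [[6], [8]]]   # column blocks B_0, B_1 of B = [[5, 6], [7, 8]]
omegas = [1, 2, 3, 4, 5, 6]           # P = 6 distinct evaluation points

# Worker i holds p_A(w_i), p_B(w_i) and returns h(w_i) = p_A(w_i)^T p_B(w_i).
results = {}
for i, w in enumerate(omegas):
    A_tilde = eval_poly(A_blocks, [0, 1], w)   # exponents i
    B_tilde = eval_poly(B_blocks, [0, M], w)   # exponents j * M
    results[i] = matmul(transpose(A_tilde), B_tilde)

alive = [0, 2, 3, 5]                        # workers 1 and 4 straggle; K = M^2 = 4 remain
pts = [omegas[i] for i in alive]
vals = [results[i][0][0] for i in alive]    # blocks are 1x1 in this toy instance
h = interpolate(pts, vals)                  # h[i + j*M] is the block A_i^T B_j
C = [[h[i + j * M] for j in range(M)] for i in range(M)]
print(C)
```

Any other choice of four responding workers yields the same `C`, which is the point of a recovery threshold that does not depend on $P$.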

|

|

||||||

|

|

||||||

### MatDot Codes

|

|

||||||

|

|

||||||

[link to paper](https://arxiv.org/pdf/1801.10292)

|

|

||||||

|

|

||||||

Problem formulation:

|

|

||||||

|

|

||||||

- We want to compute $C=A^\top B$.

|

|

||||||

- Unlike polynomial codes, we let $A=\begin{bmatrix}

|

|

||||||

A_0\\

|

|

||||||

A_1\\

|

|

||||||

\vdots\\

|

|

||||||

A_{M-1}

|

|

||||||

\end{bmatrix}$ and $B=\begin{bmatrix}

|

|

||||||

B_0\\

|

|

||||||

B_1\\

|

|

||||||

\vdots\\

|

|

||||||

B_{M-1}

|

|

||||||

\end{bmatrix}$. And $A,B\in \mathbb{F}^{L\times L}$.

|

|

||||||

|

|

||||||

- In polynomial codes, $A=\begin{bmatrix}

|

|

||||||

A_0 A_1\ldots A_{M-1}

|

|

||||||

\end{bmatrix}$ and $B=\begin{bmatrix}

|

|

||||||

B_0 B_1\ldots B_{M-1}

|

|

||||||

\end{bmatrix}$.

|

|

||||||

|

|

||||||

Key observation:

|

|

||||||

|

|

||||||

$A_m^\top$ is an $L\times \frac{L}{M}$ matrix, and $B_m$ is an $\frac{L}{M}\times L$ matrix. Hence, $A_m^\top B_m$ is an $L\times L$ matrix.

|

|

||||||

|

|

||||||

Let $C=A^\top B=\sum_{m=0}^{M-1} A_m^\top B_m$.

|

|

||||||

|

|

||||||

Let $p_A(x)=\sum_{m=0}^{M-1} A_m x^m$, degree $M-1$.

|

|

||||||

|

|

||||||

Let $p_B(x)=\sum_{m=0}^{M-1} B_m x^{M-1-m}$, degree $M-1$ (the exponents are reversed so that matching indices meet at $x^{M-1}$).

|

|

||||||

|

|

||||||

Both have degree $M-1$.

|

|

||||||

|

|

||||||

And $h(x)=p_A(x)^\top p_B(x)$.

|

|

||||||

|

|

||||||

$\deg h(x)\leq M-1+M-1=2M-2$

|

|

||||||

|

|

||||||

Key observation:

|

|

||||||

|

|

||||||

- The coefficient of the term $x^{M-1}$ in $h(x)$ is $\sum_{m=0}^{M-1} A_m^\top B_m$.

|

|

||||||

|

|

||||||

Recall that $C=A^\top B=\sum_{m=0}^{M-1} A_m^\top B_m$.

|

|

||||||

|

|

||||||

Finding this coefficient is equivalent to finding the result of $A^\top B$.

|

|

||||||

|

|

||||||

> Here we sacrifice the bandwidth of the network for the computational power.

|

|

||||||

|

|

||||||

#### General Scheme for MatDot Codes

|

|

||||||

|

|

||||||

The master chooses distinct $\omega_0,\omega_1,\ldots,\omega_{P-1}\in \mathbb{F}$.

|

|

||||||

|

|

||||||

- Note that this requires $|\mathbb{F}|\geq P$.

|

|

||||||

|

|

||||||

For every node $i\in [0,P-1]$, the master computes $\tilde{A}_i=p_A(\omega_i)$ and $\tilde{B}_i=p_B(\omega_i)$.

|

|

||||||

|

|

||||||

- $p_A(x)=\sum_{m=0}^{M-1} A_m x^m$, degree $M-1$.

|

|

||||||

- $p_B(x)=\sum_{m=0}^{M-1} B_m x^{M-1-m}$, degree $M-1$.

|

|

||||||

|

|

||||||

The master sends $\tilde{A}_i,\tilde{B}_i$ to node $i$.

|

|

||||||

|

|

||||||

Every node $i\in [0,P-1]$ computes and returns $c_i=p_A(\omega_i)^\top p_B(\omega_i)$ to the master.

|

|

||||||

|

|

||||||

The master needs $\deg h(x)+1=2M-1$ evaluations to obtain $h(x)$.

|

|

||||||

|

|

||||||

- The recovery threshold is $K=2M-1$
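
A toy run of this scheme, as a sketch only. It assumes the prime field of size 101, $M=2$, $2\times 2$ matrices split into row blocks, and that $p_B$ uses the reversed exponents $x^{M-1-m}$ (as in the MatDot paper), so that the coefficient of $x^{M-1}$ in $p_A(x)^\top p_B(x)$ is $\sum_m A_m^\top B_m$. The helper names are hypothetical.

```python
Q = 101  # toy prime field size (assumption for illustration)

def transpose(X):
    return [list(col) for col in zip(*X)]

def matmul(X, Y):
    return [[sum(a * b for a, b in zip(row, col)) % Q for col in zip(*Y)] for row in X]

def eval_poly(blocks, exps, w):
    """Evaluate the matrix polynomial sum_i blocks[i] * w**exps[i], entrywise mod Q."""
    rows, cols = len(blocks[0]), len(blocks[0][0])
    return [[sum(blk[r][c] * pow(w, e, Q) for blk, e in zip(blocks, exps)) % Q
             for c in range(cols)] for r in range(rows)]

def coeff(points, values, d):
    """Coefficient of x**d in the interpolating polynomial through (points, values), mod Q."""
    total = 0
    for k, wk in enumerate(points):
        poly, denom = [1], 1
        for j, wj in enumerate(points):
            if j != k:
                # multiply poly by (x - wj); coefficients are lowest degree first
                poly = [(a - wj * b) % Q for a, b in zip([0] + poly, poly + [0])]
                denom = denom * (wk - wj) % Q
        total = (total + values[k] * poly[d] * pow(denom, -1, Q)) % Q
    return total

M, L = 2, 2
A_blocks = [[[1, 2]], [[3, 4]]]   # row blocks A_0, A_1 of A = [[1, 2], [3, 4]]
B_blocks = [[[5, 6]], [[7, 8]]]   # row blocks B_0, B_1 of B = [[5, 6], [7, 8]]
omegas = [1, 2, 3, 4, 5]          # P = 5 distinct evaluation points

# Worker i returns the full L x L matrix p_A(w_i)^T p_B(w_i).
results = [matmul(transpose(eval_poly(A_blocks, [0, 1], w)),
                  eval_poly(B_blocks, [1, 0], w))      # reversed exponents M-1-m
           for w in omegas]

alive = [0, 2, 4]                 # any K = 2M - 1 = 3 responses suffice
pts = [omegas[i] for i in alive]
C = [[coeff(pts, [results[i][r][c] for i in alive], M - 1) for c in range(L)]
     for r in range(L)]
print(C)
```

Each worker returns a full $L\times L$ matrix here, which illustrates the larger communication cost compared with polynomial codes.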

|

|

||||||

|

|

||||||

### Recap on Matrix-Matrix multiplication

|

|

||||||

|

|

||||||

$A,B\in \mathbb{F}^{L\times L}$, we want to compute $C=A^\top B$ with $P$ nodes.

|

|

||||||

|

|

||||||

Every node receives $\frac{1}{M}$ of $A$ and $\frac{1}{M}$ of $B$.

|

|

||||||

|

|

||||||

|Code| Recovery threshold $K$|

|

|

||||||

|:--:|:--:|

|

|

||||||

|1D-MDS| $\Theta(P)$ |

|

|

||||||

|2D-MDS| $\leq \Theta(\sqrt{P})$ |

|

|

||||||

|Polynomial codes| $\Theta(M^2)$ |

|

|

||||||

|MatDot codes| $\Theta(M)$ |

|

|

||||||

|

|

||||||

## Polynomial Evaluation

|

|

||||||

|

|

||||||

Problem formulation:

|

|

||||||

|

|

||||||

- We have $K$ datasets $X_1,X_2,\ldots,X_K$.

|

|

||||||

- Want to compute some polynomial function $f$ of degree $d$ on each dataset.

|

|

||||||

- Want $f(X_1),f(X_2),\ldots,f(X_K)$.

|

|

||||||

- Examples:

|

|

||||||

- $X_1,X_2,\ldots,X_K$ are points in $\mathbb{F}^{M\times M}$, and $f(X)=X^8+3X^2+1$.

|

|

||||||

- $X_k=(X_k^{(1)},X_k^{(2)})$, both in $\mathbb{F}^{M\times M}$, and $f(X_k)=X_k^{(1)}X_k^{(2)}$.

|

|

||||||

- Gradient computation.

|

|

||||||

|

|

||||||

$P$ worker nodes:

|

|

||||||

|

|

||||||

- Some are stragglers, i.e., not responsive.

|

|

||||||

- Some are adversaries, i.e., return erroneous results.

|

|

||||||

- Privacy: We do not want to expose datasets to worker nodes.

|

|

||||||

|

|

||||||

### Replication code

|

|

||||||

|

|

||||||

Suppose $P=(r+1)\cdot K$.

|

|

||||||

|

|

||||||

- Partition the $P$ nodes to $K$ groups of size $r+1$ each.

|

|

||||||

- Node in group $i$ computes and returns $f(X_i)$ to the master.

|

|

||||||

- Replication tolerates $r$ stragglers, or $\lfloor \frac{r}{2} \rfloor$ adversaries.
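
The decoding rule behind these tolerances can be sketched as a majority vote within each group. This is an illustrative sketch: the function name `decode_group` and the use of `None` to mark a straggler are assumptions.

```python
from collections import Counter

def decode_group(responses):
    """Majority vote over one group's responses; None marks a straggler."""
    received = [r for r in responses if r is not None]
    if not received:
        raise ValueError("all nodes in the group straggled")
    value, _count = Counter(received).most_common(1)[0]
    return value

# Group of size r + 1 = 3 computing f(X_i) = 9.
print(decode_group([9, None, None]))  # r = 2 stragglers, one honest response left
print(decode_group([9, 7, 9]))        # floor(r / 2) = 1 adversary is outvoted
```

With $r+1$ honest copies of the same value, $\lfloor r/2\rfloor$ wrong answers can never form a majority, which is where the adversary bound comes from.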

|

|

||||||

|

|

||||||

### Linear codes

|

|

||||||

|

|

||||||

Recall previous linear computations (matrix-vector):

|

|

||||||

|

|

||||||

- $[\tilde{A}_1,\tilde{A}_2,\tilde{A}_3]=[A_1,A_2,A_1+A_2]$ is the corresponding codeword of $[A_1,A_2]$.

|

|

||||||

- Every worker node $i$ computes $f(\tilde{A}_i)=\tilde{A}_i x$.

|

|

||||||

- $[\tilde{A}_1x, \tilde{A}_2x, \tilde{A}_3x]=[A_1x,A_2x,A_1x+A_2x]$ is the corresponding codeword of $[A_1x,A_2x]$.

|

|

||||||

- This enables decoding $[A_1x,A_2x]$ from $[\tilde{A}_1x,\tilde{A}_2x,\tilde{A}_3 x]$.

|

|

||||||

|

|

||||||

However, $f$ is a **polynomial of degree $d$**, not a linear transformation unless $d=1$.

|

|

||||||

|

|

||||||

- $f(cX)\neq cf(X)$, where $c$ is a constant.

|

|

||||||

- $f(X_1+X_2)\neq f(X_1)+f(X_2)$.

|

|

||||||

|

|

||||||

> [!CAUTION]

|

|

||||||

>

|

|

||||||

> $[f(\tilde{X}_1),f(\tilde{X}_2),\ldots,f(\tilde{X}_K)]$ is not the codeword corresponding to $[f(X_1),f(X_2),\ldots,f(X_K)]$ in any linear code.

|

|

||||||

|

|

||||||

Our goal is to create an encoder/decoder such that:

|

|

||||||

|

|

||||||

- Linear encoding: $[\tilde{X}_1,\tilde{X}_2,\ldots,\tilde{X}_P]$ is the codeword of $[X_1,X_2,\ldots,X_K]$ for some linear code.

|

|

||||||

- i.e., $[\tilde{X}_1,\tilde{X}_2,\ldots,\tilde{X}_P]=[X_1,X_2,\ldots,X_K]G$ for some generator matrix $G\in \mathbb{F}^{K\times P}$.

|

|

||||||

- Every $\tilde{X}_i$ is some linear combination of $X_1,\ldots,X_K$.

|

|

||||||

- The $f(X_i)$ are decodable from some subset of $f(\tilde{X}_i)$'s.

|

|

||||||

- Some of the coded results are missing or erroneous.

|

|

||||||

- $X_i$'s are kept private.

|

|

||||||

|

|

||||||

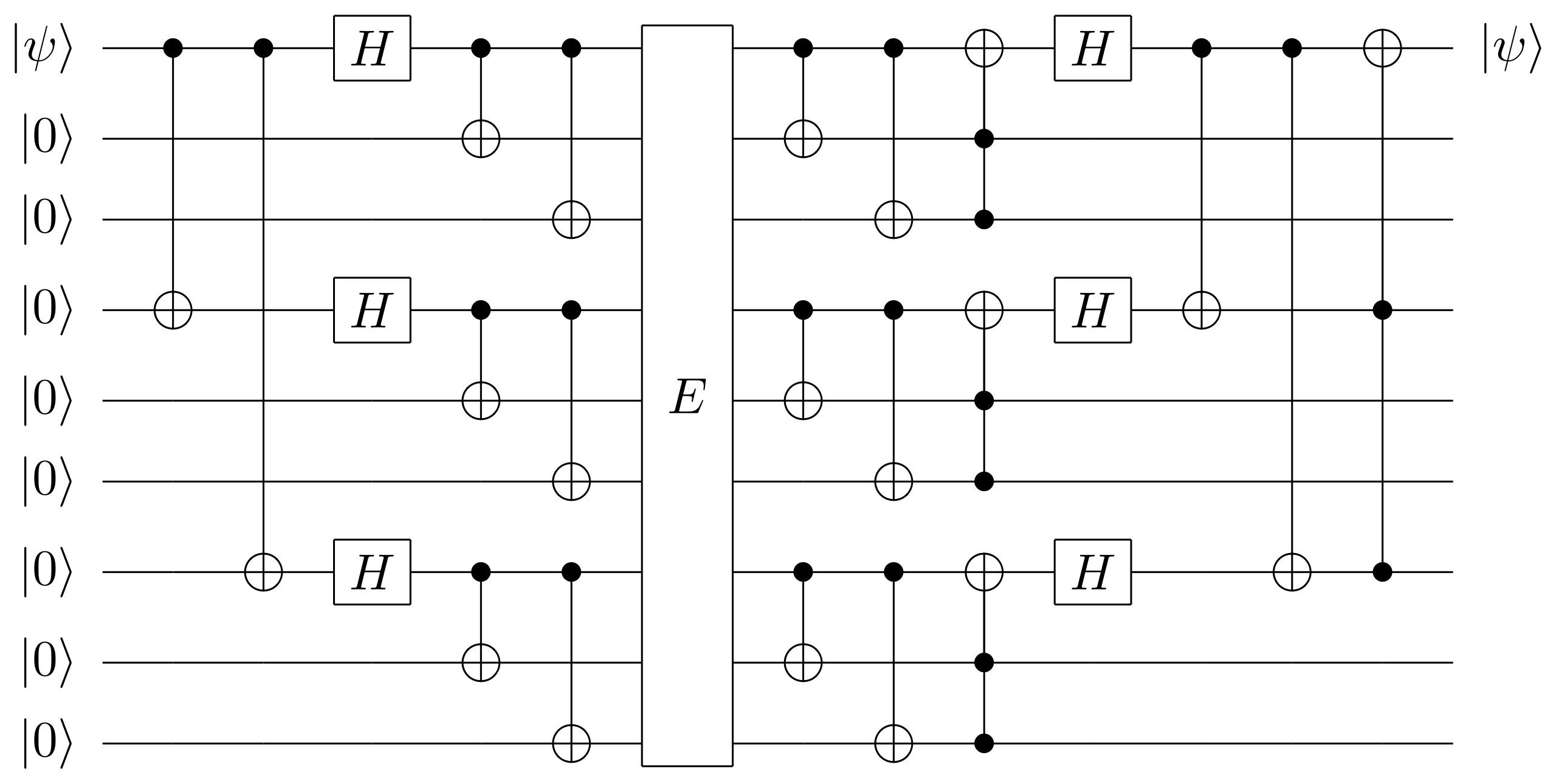

### Lagrange Coded Computing

|

|

||||||

|

|

||||||

Let $\ell(z)$ be a polynomial whose evaluations at $\omega_1,\ldots,\omega_{K}$ are $X_1,\ldots,X_K$.

|

|

||||||

|

|

||||||

- That is, $\ell(\omega_i)=X_i$ for every $\omega_i\in \mathbb{F}, i\in [K]$.

|

|

||||||

|

|

||||||

Some example constructions:

|

|

||||||

|

|

||||||

Given $X_1,\ldots,X_K$ with corresponding $\omega_1,\ldots,\omega_K$

|

|

||||||

|

|

||||||

- $\ell(z)=\sum_{i=1}^K X_iL_i(z)$, where $L_i(z)=\prod_{j\in[K],j\neq i} \frac{z-\omega_j}{\omega_i-\omega_j}$, so that $L_i(\omega_j)=\begin{cases} 0 & \text{if } j\neq i \\ 1 & \text{if } j=i \end{cases}$.

|

|

||||||

|

|

||||||

Then every $f(X_i)=f(\ell(\omega_i))$ is an evaluation of polynomial $f\circ \ell(z)$ at $\omega_i$.

|

|

||||||

|

|

||||||

If the master obtains the composition $h=f\circ \ell$, it can obtain every $f(X_i)=h(\omega_i)$.

|

|

||||||

|

|

||||||

Goal: The master wishes to obtain the polynomial $h(z)=f(\ell(z))$.

|

|

||||||

|

|

||||||

Intuition:

|

|

||||||

|

|

||||||

- Encoding is performed by evaluating $\ell(z)$ at $\alpha_1,\ldots,\alpha_P\in \mathbb{F}$, and $P>K$ for redundancy.

|

|

||||||

- Nodes apply $f$ on an evaluation of $\ell$ and obtain an evaluation of $h$.

|

|

||||||

- The master receives some potentially noisy evaluations, and finds $h$.

|

|

||||||

- The master evaluates $h$ at $\omega_1,\ldots,\omega_K$ to obtain $f(X_1),\ldots,f(X_K)$.
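
The four steps above can be traced on a scalar toy instance. This is a sketch under stated assumptions: the field is the prime field of size 101, the datasets are scalars, $f(x)=x^2+1$ is a hypothetical degree-2 polynomial, and only stragglers (no erroneous results) are handled. Since $\deg h\leq \deg f\cdot \deg \ell = 2(K-1)$, three responses are enough when $K=2$.

```python
Q = 101  # toy prime field size (assumption for illustration)

def lagrange_eval(points, values, z):
    """Evaluate at z the unique polynomial of degree < len(points)
    passing through (points[k], values[k]), with arithmetic mod Q."""
    total = 0
    for k, wk in enumerate(points):
        num, den = 1, 1
        for j, wj in enumerate(points):
            if j != k:
                num = num * (z - wj) % Q
                den = den * (wk - wj) % Q
        total = (total + values[k] * num * pow(den, -1, Q)) % Q
    return total

def f(x):
    return (x * x + 1) % Q        # hypothetical polynomial of degree d = 2

X = [3, 5]                        # K = 2 datasets
omegas = [1, 2]                   # l(omega_k) = X_k
alphas = [3, 4, 5, 6]             # P = 4 worker evaluation points

# 1. Encoding: X~_i = l(alpha_i), a linear combination of X_1, ..., X_K.
encoded = [lagrange_eval(omegas, X, a) for a in alphas]
# 2. Each worker applies f and obtains h(alpha_i) = f(l(alpha_i)).
worker_out = [f(x) for x in encoded]
# 3. The master interpolates h from any 3 responses (worker 2 straggles) ...
alive = [0, 1, 3]
pts = [alphas[i] for i in alive]
vals = [worker_out[i] for i in alive]
# 4. ... and evaluates h at omega_1, ..., omega_K.
decoded = [lagrange_eval(pts, vals, w) for w in omegas]
print(decoded, [f(x) for x in X])
```

The two printed lists agree, so the master recovers $f(X_1),f(X_2)$ without any worker ever computing on an uncoded dataset.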

|

|

||||||

|

|

||||||

### Encoding for Lagrange coded computing

|

|

||||||

|

|

||||||

Need polynomial $\ell(z)$ such that:

|

|

||||||

|

|

||||||

- $X_k=\ell(\omega_k)$ for every $k\in [K]$.

|

|

||||||

|

|

||||||

Having obtained such $\ell$ we let $\tilde{X}_i=\ell(\alpha_i)$ for every $i\in [P]$.

|

|

||||||

|

|

||||||

$\operatorname{span}\{\tilde{X}_1,\tilde{X}_2,\ldots,\tilde{X}_P\}=\operatorname{span}\{\ell_1(x),\ell_2(x),\ldots,\ell_P(x)\}$.

|

|

||||||

|

|

||||||

Want $X_k=\ell(\omega_k)$ for every $k\in [K]$.

|

|

||||||

|

|

||||||

Tool: Lagrange interpolation.

|

|

||||||

|

|

||||||

- $\ell_k(z)=\prod_{j\neq k} \frac{z-\omega_j}{\omega_k-\omega_j}$.

|

|

||||||

- $\ell_k(\omega_k)=1$ and $\ell_k(\omega_j)=0$ for every $j\neq k$.

|

|

||||||

- $\deg \ell_k(z)=K-1$.

|

|

||||||

|

|

||||||

Let $\ell(z)=\sum_{k=1}^K X_k\ell_k(z)$.

|

|

||||||

|

|

||||||

- $\deg \ell\leq K-1$.

|

|

||||||

- $\ell(\omega_k)=X_k$ for every $k\in [K]$.

|

|

||||||

|

|

||||||

Let $\tilde{X}_i=\ell(\alpha_i)=\sum_{k=1}^K X_k\ell_k(\alpha_i)$.

|

|

||||||

|

|

||||||

Every $\tilde{X}_i$ is a **linear combination** of $X_1,\ldots,X_K$.

|

|

||||||

|

|

||||||

$$

|

|

||||||

(\tilde{X}_1,\tilde{X}_2,\ldots,\tilde{X}_P)=(X_1,\ldots,X_K)\cdot G=(X_1,\ldots,X_K)\begin{bmatrix}

|

|

||||||

\ell_1(\alpha_1) & \ell_1(\alpha_2) & \cdots & \ell_1(\alpha_P) \\

|

|

||||||

\ell_2(\alpha_1) & \ell_2(\alpha_2) & \cdots & \ell_2(\alpha_P) \\

|

|

||||||

\vdots & \vdots & \ddots & \vdots \\

|

|

||||||

\ell_K(\alpha_1) & \ell_K(\alpha_2) & \cdots & \ell_K(\alpha_P)

|

|

||||||

\end{bmatrix}

|

|

||||||

$$

|

|

||||||

|

|

||||||

This $G$ is called a **Lagrange matrix** with respect to

|

|

||||||

|

|

||||||